FilesFM_Announcements (OP)

Copper Member

Member

Offline Offline

Activity: 224

Merit: 14

|

|

October 01, 2018, 09:19:20 AM |

|

The technological singularity: how far are we from the 2045 date when, according to Ray Kurzweil, this will all come together in the next leap in civilisation? He also predicts that computers will be as smart as humans by 2029.

What are your thoughts?

af_newbie

Legendary

Offline Offline

Activity: 2688

Merit: 1468

|

|

October 01, 2018, 12:20:27 PM |

|

The technological singularity, how far are we off from the 2045 date when this will all come together to the next leap in civilisation or that computers will be as smart as humans by 2029 - according to Ray Kurzweil

Whats your guys thoughts..

I think progress in AI will not be linear, but 2029 might be too optimistic. Quantum computing will play a role in AI achieving supremacy over humans. Humans are bad at processing large sets of data. People who are skeptical about AI do not understand AI.

Moloch

|

|

October 01, 2018, 01:49:53 PM

Last edit: October 01, 2018, 02:00:38 PM by Moloch |

|

...computers will be as smart as humans by 2029

I hate to break it to you, but computers are already smarter than humans. Deep Blue beat the world chess champion in 1997. More recently, deep learning AI has been able to beat humans at Go, a more complex game than chess.

https://en.wikipedia.org/wiki/Computer_Go#2015_onwards:_The_deep_learning_era

In October 2015, Google DeepMind program AlphaGo beat Fan Hui, the European Go champion, five times out of five in tournament conditions.

In March 2016, AlphaGo beat Lee Sedol in the first three of five matches.

In May 2017, AlphaGo beat Ke Jie, who at the time was ranked top in the world, in a three-game match during the Future of Go Summit.

In October 2017, DeepMind revealed a new version of AlphaGo, trained only through self play, that had surpassed all previous versions, beating the Ke Jie version in 89 out of 100 games.

A well-designed AI is better than a human at nearly anything these days, but everything is very specific. AlphaGo is designed to play a game called Go; it would be horrible at doing anything else.

I assume the dates of 2029 and 2045 are guesses as to when AI will achieve consciousness.

I would guess closer to 50 years from now myself, maybe 2070, mostly because science has no idea where consciousness comes from. A programmer can create a neural net that acts like a brain, but how do you give it consciousness and free will?

Your consciousness is not the brain itself, but rather "the watcher" or "the decider", aka "the man behind the curtain"... how do you give such an aspect to a computer?

Some people think that once a neural network becomes large enough it could become self-aware spontaneously. If this happened, it could be a lot sooner than 50 years. Though I don't see how you could even tell it was self-aware unless it had the ability to reprogram itself.

A self-aware AI with the ability to reprogram itself would evolve faster than people could keep up with it. Soon we would not understand its code, even if it allowed us to view it. This sounds like the "singularity" you refer to. You can't put that genie back in the bottle once it is out...

criza

|

|

October 01, 2018, 02:16:30 PM |

|

Computers will not become as smart as humans, because they already are. Computers are far more capable than humans of doing things conveniently and easily. They are faster and more accurate at calculations and the like. They are more sophisticated when it comes to the ability to work on things in a versatile and extraordinary manner. However, despite the computers' advantages over humans, we cannot deny the fact that computers were created by humans, and there could be no computers without the existence of human minds. From this we can infer that human minds develop naturally, without being programmed by others, while a computer's functions cannot grow unless humans program them.

Spendulus

Legendary

Offline Offline

Activity: 2898

Merit: 1386

|

|

October 01, 2018, 02:17:09 PM |

|

The technological singularity, how far are we off from the 2045 date when this will all come together....

I don't know. I will ask Google. |

bluefirecorp_

|

|

October 01, 2018, 02:21:17 PM |

|

As soon as artificial general intelligence (AGI) is created, the technological singularity is only weeks away.

The difficulty lies in creating the artificial general intelligence. Application-specific intelligence is a lot easier than general intelligence.

SneakyLady

Jr. Member

Offline Offline

Activity: 114

Merit: 2

|

|

October 01, 2018, 08:15:42 PM |

|

We're going to join AI's capabilities with things like Musk's Neuralink implanted in our minds within the next ten years. This could potentially be used as mind-uploading tech (brain scanning), allowing us to join the cloud and remain immortal in the virtual space.

We're already getting acquainted with AI in our daily lives thanks to things like virtual agents.

Instely

Jr. Member

Offline Offline

Activity: 56

Merit: 9

|

I would guess closer to 50 years from now myself, maybe 2070. Mostly because science has no idea where consciousness comes from. A programmer can create a neural-net that acts like a brain, but, how do you give it a consciousness and free will?

Your consciousness is not the brain itself, but rather "the watcher" or "the decider", aka "the man behind the curtain"... how do you give such an aspect to a computer?

Some people think that once a neural network becomes large enough it could become self-aware spontaneously. If this happened it could be a lot sooner than 50 years. Though, I don't see how you could even tell it was self-aware unless it had the ability to reprogram itself.

A self-aware AI with the ability to reprogram itself would evolve faster than people could keep up with it. Soon we would not understand it's code, even if it allowed us to view it. This sounds like the "singularity" you refer to. You can't put that genie back in the bottle once it is out...

You are touching directly on the important point here: the theme of consciousness, and how far we are from creating not artificial intelligence (because that has already been created) but artificial CONSCIOUSNESS, which basically means a mechanism that is able to reflect on its own condition and is capable of learning and reorganizing itself to overcome its obstacles. That's the real philosophical deal in this field, which was explored by Philip K. Dick in his novel "Do Androids Dream of Electric Sheep?" and in the Ridley Scott movie "Blade Runner", which is an adaptation of that novel.

I like the position that Ben Goertzel (probably the greatest AI developer in history) takes: he sees consciousness not as a consequence of a complex interconnected web of neurons but as the most fundamental 'substance' of which all of reality is made. For him, it doesn't depend on whether a robot has consciousness or not, because he believes that everything we see, touch and experience is just an expression of (the fundamental) consciousness, just in different degrees and shapes. So everything we can perceive is made of the same consciousness and, because of that, EVERYTHING has consciousness. (If you want to listen to him talking about this specific topic, watch this video at minute 9:12: https://www.youtube.com/watch?v=owppju3jwPE&t=515s)

It may sound crazy from a materialistic/traditional science point of view, but even the most advanced scientific understanding that we have nowadays (which is quantum mechanics) may show that there is evidence of it being totally possible.

Spendulus

Legendary

Offline Offline

Activity: 2898

Merit: 1386

|

|

October 01, 2018, 09:36:36 PM |

|

....everything we can perceive is made of the same consciousness and because of that, EVERYTHING has consciousness. (If you want to listen to him talking about this specific topic, watch this video at minute 9:12: https://www.youtube.com/watch?v=owppju3jwPE&t=515s) It may sound crazy from a materialistic/traditional science point of view, but even the most advanced scientific understanding that we have nowadays (which is quantum mechanics) may show that there is evidence of it being totally possible.

My consciousness says your consciousness is wrong, and what my consciousness knows about QM says there's zero relationship between QM principles or theory and consciousness.

But it's worth noting that if AI develops consciousness here in 50 years or less, then it has already done so in numerous other places in the universe a long time ago.

FilesFM_Announcements (OP)

Copper Member

Member

Offline Offline

Activity: 224

Merit: 14

|

|

October 02, 2018, 09:09:09 AM |

|

I read a few years ago that we put 300,000 rat neurons into a robot, and out of pure boredom the robot started doing things of its own accord. Consciousness in some form was born. Can't we lift the moral dilemma about what is defined as 'life' and start really exploring deeply what happens if we put 30,000,000 human neurons into a robot?

HYBROT

Won't organic neurons, bionic CPUs and programmable DNA be the best way to spawn AI and its artificial general intelligence (AGI)/consciousness? In turn, the answer to the questions and concerns about how AI will react to us will be that we are nothing more than the antiquated ancestors of the next organic/semiconductor evolutionary step. Surely that puts to rest the fears of AGI being our demise.

af_newbie

Legendary

Offline Offline

Activity: 2688

Merit: 1468

|

|

October 02, 2018, 03:41:15 PM |

|

I read a few years ago that we put 300,000 rat neurons into a robot, and out of pure boredom the robot started doing things of its own accord. Consciousness in some form was born. Can't we lift the moral dilemma about what is defined as 'life' and start really exploring deeply what happens if we put 30,000,000 human neurons into a robot?

HYBROT

Won't organic neurons, bionic CPUs and programmable DNA be the best way to spawn AI and its artificial general intelligence (AGI)/consciousness? In turn, the answer to the questions and concerns about how AI will react to us will be that we are nothing more than the antiquated ancestors of the next organic/semiconductor evolutionary step. Surely that puts to rest the fears of AGI being our demise.

We have already passed the 'A life' stage.

https://www.youtube.com/watch?v=M5gIKn7mc2U

TECSHARE

In memoriam

Legendary

Offline Offline

Activity: 3318

Merit: 1958

First Exclusion Ever

|

|

October 02, 2018, 05:00:43 PM |

|

We have already reached the singularity; it's just that none of us have clearance to know about it.

theymos

Administrator

Legendary

Offline Offline

Activity: 5166

Merit: 12864

|

|

October 02, 2018, 10:53:46 PM |

|

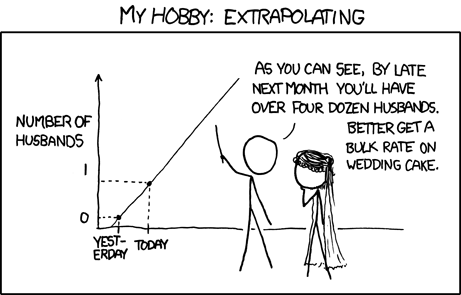

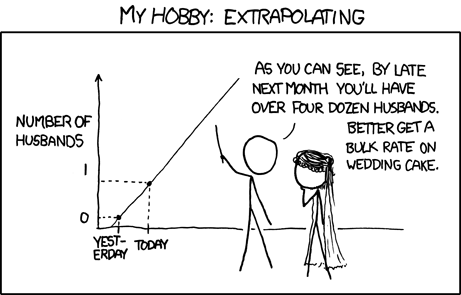

I read Kurzweil's book several years ago, and it was really stupid. It's the extrapolation fallacy in book form: He seems to believe that progress just happens regardless of everything else. In reality, progress happens because people make it happen, and it can be stopped if we hit a wall in research or if society changes to no longer allow for effective/useful scientific progress.

If human-level AI is created on traditional computing systems, then a singularity-like explosion of technology seems likely. There are existential risks there, but also potentially ~infinite benefit.

But I'm not convinced that we're close to human-level AI. Deep neural networks can do some impressive things, but they don't actually think or plan: they're like a very effective form of intuition. I don't think that we will find human-level AI at the end of that road. In the worst-case scenario we should eventually be able to completely map out the human brain and simulate it on a computer, but that'll be many decades into the future at least.

Many leftists have a habit of thinking that since progress continues continuously and exponentially, we should just assume post-scarcity any day now. Which is exactly how you damage society and the economy so badly that you stop all progress completely...

Spendulus

Legendary

Offline Offline

Activity: 2898

Merit: 1386

|

|

October 02, 2018, 11:24:03 PM |

|

I read Kurzweil's book several years ago, and it was really stupid. It's the extrapolation fallacy in book form: He seems to believe that progress just happens regardless of everything else. In reality, progress happens because people make it happen, and it can be stopped if we hit a wall in research or if society changes to no longer allow for effective/useful scientific progress. If human-level AI is created on traditional computing systems, then a singularity-like explosion of technology seems likely. There are existential risks there, but also potentially ~infinite benefit. But I'm not convinced that we're close to human-level AI. Deep neural networks can do some impressive things, but they don't actually think or plan: they're like a very effective form of intuition. I don't think that we will find human-level AI at the end of that road. In the worst-case scenario we should eventually be able to completely map out the human brain and simulate it on a computer, but that'll be many decades into the future at least. Many leftists have a habit of thinking that since progress continues continuously and exponentially, we should just assume post-scarcity any day now. Which is exactly how you damage society and the economy so badly that you stop all progress completely...

These are very good points, but note the entire discussion is about "the time frame." Suppose instead of 50 years, you look at the next 500 years ....

FilesFM_Announcements (OP)

Copper Member

Member

Offline Offline

Activity: 224

Merit: 14

|

|

October 03, 2018, 05:44:29 AM |

|

Come on, 'Sophia' is a gimmick. It's similar to rule-based programming, like the old days when you asked a technician questions about your car or PC and they went through a set of predetermined answers. The only difference here is that MAYBE Sophia can choose which of the predefined answers to give. But this isn't AI: anybody who asks Sophia a question always has a piece of paper in front of them when asking.

Spendulus

Legendary

Offline Offline

Activity: 2898

Merit: 1386

|

|

October 03, 2018, 02:19:34 PM |

|

I read Kurzweil's book several years ago, and it was really stupid. It's the extrapolation fallacy in book form: ..... leftists have a habit of thinking that since progress continues continuously and exponentially, we should just assume post-scarcity any day now. Which is exactly how you damage society and the economy so badly that you stop all progress completely...

Whether in human or machine form, we're certainly NOT SEEING an exponential extrapolation of intelligence. |

FilesFM_Announcements (OP)

Copper Member

Member

Offline Offline

Activity: 224

Merit: 14

|

|

October 04, 2018, 02:44:06 PM |

|

I read Kurzweil's book several years ago, and it was really stupid. It's the extrapolation fallacy in book form: ..... leftists have a habit of thinking that since progress continues continuously and exponentially, we should just assume post-scarcity any day now. Which is exactly how you damage society and the economy so badly that you stop all progress completely...

Whether in human or machine form, we're certainly NOT SEEING an exponential extrapolation of intelligence.

I sadly have to temper my opinions about a particular group of people influencing our world today, manipulating media, TV, movies, academia, politics and our fragile financial systems. Perhaps once we wake up from the grip of this particular group, things will look more positive.

On a lighter note, has anybody read 'Who Owns the Future?' by Jaron Lanier?

https://en.wikipedia.org/wiki/Who_Owns_the_Future%3F

Spendulus

Legendary

Offline Offline

Activity: 2898

Merit: 1386

|

|

October 04, 2018, 10:19:51 PM |

|

I read Kurzweil's book several years ago, and it was really stupid. It's the extrapolation fallacy in book form: ..... leftists have a habit of thinking that since progress continues continuously and exponentially, we should just assume post-scarcity any day now. Which is exactly how you damage society and the economy so badly that you stop all progress completely...

Whether in human or machine form, we're certainly NOT SEEING an exponential extrapolation of intelligence.

I sadly have to temper my opinions about a particular group of people influencing our world today, manipulating media, TV, movies, academia, politics and our fragile financial systems. Perhaps once we wake up from the grip of this particular group, things will look more positive.

On a lighter note, has anybody read 'Who Owns the Future?' by Jaron Lanier?

https://en.wikipedia.org/wiki/Who_Owns_the_Future%3F

The Wikipedia article on the technological singularity is very good also.

https://en.wikipedia.org/wiki/Technological_singularity

Green_Bulb

Jr. Member

Offline Offline

Activity: 261

Merit: 3

|

|

October 05, 2018, 06:48:34 AM |

|

That's the real philosophical deal in this field, which was explored by Philip K. Dick in his novel "Do Androids Dream of Electric Sheep?" and in the Ridley Scott movie "Blade Runner", which is an adaptation of that novel.

I'm afraid the scenario of "I Have No Mouth, and I Must Scream" by Harlan Ellison is more likely to happen. Governments will search for ways to weaponize AI for the seemingly just cause of national security. What if the AI then decides that the best way to ensure said security is to nuke the others?

FilesFM_Announcements (OP)

Copper Member

Member

Offline Offline

Activity: 224

Merit: 14

|

|

October 05, 2018, 01:23:50 PM

Last edit: October 05, 2018, 02:21:37 PM by FilesFM_Announcements |

|

That's the real philosophical deal in this field, which was explored by Philip K. Dick in his novel "Do Androids Dream of Electric Sheep?" and in the Ridley Scott movie "Blade Runner", which is an adaptation of that novel.

I'm afraid the scenario of "I Have No Mouth, and I Must Scream" by Harlan Ellison is more likely to happen. Governments will search for ways to weaponize AI for the seemingly just cause of national security. What if the AI then decides that the best way to ensure said security is to nuke the others?

What a scary thought! Logically, I thought nukes have always required two keys, two presses of the button, as a way to stop one lone idiot from causing WW3. I think it will be the same with AI implementation: it will require some human intervention too for making such decisions. I know killer AI is being explored, but that's more for battlefield 1:1 type situations.
