brainless

Member

Activity: 316

Merit: 34

June 15, 2020, 07:53:31 PM

Thanks for the help. I was able to compile a file for your clients; the program helped fix the extensions as needed (bat and txt). I started it, and after 3 minutes at 1050 I got an answer in 65save.txt, but I could not read it: everything there looks like hieroglyphs. Please explain how to proceed.

Download and install EmEditor, right-click the file and open it with EmEditor; it has all the options and formats for opening the file.

13sXkWqtivcMtNGQpskD78iqsgVy9hcHLF

Advertised sites are not endorsed by the Bitcoin Forum. They may be unsafe, untrustworthy, or illegal in your jurisdiction.

COBRAS

Member

Activity: 828

Merit: 20

$$P2P BTC BRUTE.JOIN NOW ! https://uclck.me/SQPJk

June 15, 2020, 07:56:04 PM

A dead kangaroo means there was a collision inside the same herd: either two wilds or two tames collided. The program automatically replaces the dead kangaroo with another kangaroo, so no worries.
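To make the distinction concrete, here is a minimal Python sketch of how a distinguished-point (DP) table might classify a newly reported DP. The names (`insert_dp`, `DPEntry`, `TAME`, `WILD`) are hypothetical and this is not the Kangaroo program's actual code; it assumes a tame kangaroo records its absolute position (known start plus jumps) while a wild kangaroo records only the distance jumped from the target public key.

```python
from dataclasses import dataclass

TAME, WILD = 0, 1

@dataclass
class DPEntry:
    distance: int  # tame: absolute position reached; wild: distance jumped from the target
    herd: int      # TAME or WILD

def insert_dp(table, point_key, distance, herd):
    """Store a distinguished point (DP) and classify any collision.

    Returns ("new", None), ("dead", None) or ("solved", private_key).
    """
    prev = table.get(point_key)
    if prev is None:
        table[point_key] = DPEntry(distance, herd)
        return ("new", None)
    if prev.herd == herd:
        # Two tames or two wilds on the same point: their paths have merged, so one
        # kangaroo is now redundant ("dead") and should be replaced by a fresh one.
        return ("dead", None)
    # Tame meets wild at the same point: tame_pos = key + wild_dist.
    tame_pos = prev.distance if prev.herd == TAME else distance
    wild_dist = distance if herd == WILD else prev.distance
    return ("solved", tame_pos - wild_dist)
```

A same-herd hit only means two kangaroos now share one path (wasted work, no information about the key); only a tame/wild hit yields the key.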

Thank you for your reply. How do I use this information about dead kangaroos? Only 1 of my 10 pubkeys had a dead kangaroo, and I stopped the calculation for that pubkey because the operation count reached its maximum. What do I need to do for the pubkey with the dead kangaroo? Search a larger range (more bits), or something else? Thanks.

WanderingPhilospher

Full Member

Activity: 1050

Merit: 219

Shooters Shoot...

June 15, 2020, 08:01:06 PM

How big of a range are you searching? One dead kangaroo is nothing, really. If the pubkey isn't in the range you were searching, then yes, I'd search a different range. If it's a small enough range, use BSGS to make sure the pubkey isn't in the range.
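BSGS (baby-step giant-step) solves a discrete log in a range of width W with roughly 2·sqrt(W) group operations and a table of about sqrt(W) entries, which is why it only suits small ranges, but unlike kangaroo it gives a definite yes/no answer. A minimal Python sketch, assuming a toy multiplicative group modulo a prime as a stand-in for secp256k1 points (a real check would use elliptic-curve point addition); `bsgs_in_range` is a hypothetical name:

```python
from math import isqrt

def bsgs_in_range(g, h, p, lo, hi):
    """Return k in [lo, hi] with pow(g, k, p) == h, or None if no such k exists."""
    width = hi - lo + 1
    m = isqrt(width - 1) + 1            # ceil(sqrt(width)) baby steps
    # Shift the target so we search x in [0, width) with g^x == h * g^(-lo)
    target = h * pow(g, -lo, p) % p     # negative exponent needs Python 3.8+
    baby = {}
    e = 1
    for j in range(m):                  # baby steps: store g^j -> smallest j
        baby.setdefault(e, j)
        e = e * g % p
    giant = pow(g, -m, p)               # multiplying by this takes one giant step
    gamma = target
    for i in range(m):                  # giant steps: target * g^(-i*m)
        j = baby.get(gamma)
        if j is not None and i * m + j < width:
            return lo + i * m + j
        gamma = gamma * giant % p
    return None
```

A `None` result proves the key is not in [lo, hi], which is exactly the "make sure the pubkey isn't in the range" check.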

arulbero

Legendary

Activity: 1914

Merit: 2071

June 15, 2020, 08:04:10 PM

To recap (and to get back on topic):

#115 -> 114 bits

Steps needed for a 50% chance of a collision: about 2^((114/2)+1) = 2^58

DP = 25

Steps performed: 2^25 * 2^33.14 = 2^58.14, so you are close to the result?
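The recap's arithmetic works in log2 units: each DP stands for roughly 2^DP jumps on average, so the number of DPs times 2^25 estimates the jumps performed. A small Python sketch of that bookkeeping, using the (range_bits/2)+1 rule of thumb from the post; the function names are illustrative only, and the estimate ignores the jumps each kangaroo has made since its last DP.

```python
def expected_steps_log2(range_bits: float) -> float:
    """log2 of the steps for a ~50% collision chance, per the (range_bits/2)+1 rule of thumb."""
    return range_bits / 2 + 1

def performed_steps_log2(dp_bits: int, dp_count_log2: float) -> float:
    """log2 of jumps performed: each DP represents about 2^dp_bits jumps on average."""
    return dp_bits + dp_count_log2

def work_ratio(range_bits: float, dp_bits: int, dp_count_log2: float) -> float:
    """Performed work as a multiple of the expected work (not a success probability)."""
    return 2 ** (performed_steps_log2(dp_bits, dp_count_log2) - expected_steps_log2(range_bits))

# #115: 114-bit range, DP = 25, 2^33.14 DPs collected so far
print(expected_steps_log2(114))                    # 58.0
print(round(performed_steps_log2(25, 33.14), 2))   # 58.14
print(round(work_ratio(114, 25, 33.14), 2))        # 1.1
```

The same bookkeeping applied to the final figure of 2^33.36 DPs at DP25 gives 2^58.36 steps.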

I expect a result at any time. You have to add a ~5% tolerance because of inconsistencies in ~0.05% of the files, so in my case 2^33.167 gives 52%. I have already crossed 2^33.18, so it is exactly as you wrote :-)

Any news? It may take more time to find #115 than to find #120...

zielar

June 15, 2020, 08:26:59 PM

In fact, at the current #115 level, everything is trying at all costs to prevent us from finding the key: hardware, data loss and a tedious restore due to a storm, placing it ... Nevertheless, together with Jean_Luc_Pons, we managed to rebuild and improve this arduous process, and the process continues. This taught us a lot in preparation for #120.

What I will add is that the 50% threshold had already been exceeded when I mentioned it recently. Within the next hour I will give you the exact statistics on the current situation. It is certain, however, that this time we are not as lucky as before, and the work is still in progress.

If you want - you can send me a donation to my BTC wallet address 31hgbukdkehcuxcedchkdbsrygegyefbvd

MrFreeDragon

June 15, 2020, 09:19:55 PM

Did you implement feedback from the server to the clients? If not, the clients continue to perform "useless work" moving dead "zombie" kangaroos. Say you have 100 different machines working independently: each machine only reproduces (replaces) the dead kangaroos it finds itself. After merging all 100 files, the server finds a lot of dead kangaroos but does not send a signal back to the clients to reproduce them. The clients keep working with those "zombies" because they get no feedback from the server. That also means that at the next merge the server receives the same kangaroos again and kills them again, while the "zombies" keep jumping on the client side, and so on. This could make the work very inefficient for wider ranges like #115 or more.
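A minimal sketch of the server-to-client feedback suggested here, under a hypothetical protocol in which each client uploads DP records as (point_key, herd, kangaroo_id) and the server answers with the kangaroo ids each client should re-seed. Nothing in this thread says the Kangaroo program works this way; the record layout and `merge_and_collect_dead` are assumptions for illustration, and tame/wild collisions (which solve the key) are deliberately left out.

```python
from collections import defaultdict

TAME, WILD = 0, 1

def merge_and_collect_dead(server_table, client_batches):
    """Merge uploaded DPs into server_table; return {client_id: [kangaroo_ids to re-seed]}.

    server_table maps point_key -> (herd, client_id, kangaroo_id) of the first kangaroo seen there.
    client_batches maps client_id -> list of (point_key, herd, kangaroo_id) records.
    """
    to_reseed = defaultdict(list)
    for client_id, batch in client_batches.items():
        for point_key, herd, kangaroo_id in batch:
            first = server_table.get(point_key)
            if first is None:
                server_table[point_key] = (herd, client_id, kangaroo_id)
            elif first[0] == herd and (first[1], first[2]) != (client_id, kangaroo_id):
                # Same herd reached an already-known DP: this kangaroo now walks an
                # existing path, so its client should replace it instead of keeping a zombie.
                to_reseed[client_id].append(kangaroo_id)
            # first[0] != herd would be a tame/wild collision (key found); omitted here.
    return dict(to_reseed)
```

Each client would then restart the listed kangaroos from fresh random positions, so the server stops receiving the same zombie paths at every merge.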

brainless

Member

Activity: 316

Merit: 34

June 15, 2020, 09:26:29 PM

If Jean Luc had created a multi-pubkey version in time, maybe the job for #115 would have taken only 1 day with zielar's GPUs at an 85-bit range. Anyway, they had an agreement, and they can't think beyond their own creation plus GPUs.

zielar

June 15, 2020, 09:28:50 PM

I don't use any implementations that are not in the latest version of Kangaroo. As a curiosity, I will add that so far I still have NO dead kangaroos!

dextronomous

June 16, 2020, 12:50:27 AM

Hi there zielar, I guess we are all waiting for your exact statistics.

I was also wondering whether we could enable some more stats inside Kangaroo, like the number of currently sampled or checked collisions.

During long waits these could help pass the time somewhat, like smilies or a percentage counter,

or anything beyond the current output that shows progress inside Kangaroo; that would be delightful.

Greets.

zielar

June 16, 2020, 01:09:52 AM

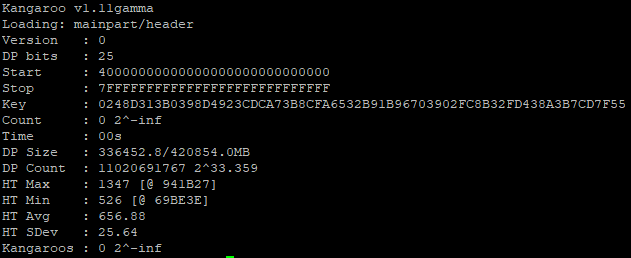

From what I know, there will be progress soon. The statistics arrive so late, because I have completed the last files and at the moment I am sure that it contains all the work that was intended for 115:  |

JDScreesh

Jr. Member

Activity: 39

Merit: 12

June 16, 2020, 07:50:35 AM

And finally #115 was found. Congratulations, zielar and Jean_Luc_Pons! We are all wondering what the private key was.

Jean_Luc (OP)

June 16, 2020, 08:00:26 AM

We (zielar and I) solved #115 after ~2^33.36 DPs (DP25), more than 300 GB of DPs. I do not know exactly how long the run took because of unwanted interruptions.

JDScreesh

Jr. Member

Activity: 39

Merit: 12

June 16, 2020, 08:06:50 AM

Congratulations on breaking the world record too. Good job! The last world record was 112 bits, right?

brainless

Member

Activity: 316

Merit: 34

June 16, 2020, 08:12:59 AM

Congratulations! Did you forget to send some satoshis to 1FoundByJLPKangaroo111Bht3rtyBav8?

Jean_Luc (OP)

June 16, 2020, 08:17:02 AM

Last edit: June 16, 2020, 08:44:58 AM by Jean_Luc

Thanks! The world record on standard architecture was also 114 bits, but on a 114-bit field. Here we solved a 114-bit key on a 256-bit field, so yes, we broke the world record on classic architecture. The world record on FPGA is 117.25 bits; it was done using up to 576 FPGAs over 6 months. With our architecture, we expect 2 months on 256 V100s to solve #120 (119-bit key); we will see if we attack it. Yes, I will send the satoshis to 1FoundByJLPKangaroo..., but first I'm waiting for zielar, who is offline at the moment. We will publish the private key in a while, once the altcoins have also been moved.
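For scale, applying the (range bits / 2) + 1 rule of thumb quoted earlier in the thread to #120 (a 119-bit range) versus #115 (a 114-bit range) gives the expected-work ratio below; this is only the ratio of expected steps and ignores hardware and DP-overhead differences.

$$
\frac{2^{119/2+1}}{2^{114/2+1}} = 2^{(119-114)/2} = 2^{2.5} \approx 5.66
$$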

brainless

Member

Activity: 316

Merit: 34

June 16, 2020, 09:18:13 AM

Now that you have a work file for the 115-bit puzzle, can we apply another 115-bit pubkey and check it against your 300 GB work file, and what is the expected time to find a solution?

supika

Newbie

Activity: 43

Merit: 0

June 16, 2020, 09:33:56 AM

All the other mortals are spectators at the show created by zielar and Jean Luc. I like the show. The show must go on!

Jean_Luc (OP)

June 16, 2020, 09:41:37 AM

Unfortunately I don't see how to reuse the work file on a different range.

This work file is for [2^114,2^115-1].

If you translate the kangaroos, the paths will differ, so you would have to redo all the work.
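Why translation breaks reuse: in a kangaroo walk the next jump is chosen from the current point itself, not from its unknown scalar, so shifting a kangaroo's start by some offset does not shift its whole path by that offset. A toy Python sketch, assuming points are plain integers and using a hypothetical `jump_index` that scrambles a point into a jump-table index (on secp256k1 this would be derived from the point's encoding):

```python
JUMPS = [1, 3, 7]  # toy jump table

def jump_index(point: int) -> int:
    """Toy stand-in for hashing a point's encoding into a jump-table index."""
    return (point * point) % len(JUMPS)

def walk(start: int, n_jumps: int) -> list:
    """Return the positions visited by a kangaroo starting at `start`."""
    path = [start]
    for _ in range(n_jumps):
        p = path[-1]
        path.append(p + JUMPS[jump_index(p)])
    return path

original = walk(0, 3)                          # [0, 1, 4, 7]
shifted = walk(1, 3)                           # [1, 4, 7, 10]
print([p + 1 for p in original] == shifted)    # False: the path is not simply translated
```

The shifted walk also lands on 4 and 7, points the first walk already visited; two kangaroos of the same herd merging like this is exactly the same-herd collision behind a dead kangaroo.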

There was an interesting question in a previous message of this topic:

Why are paths important, and why not just add DPs without computing paths?

Using only DP:

It is like drawing random numbers in a reduced space of size N/2^dpbit, so the time to solve will be O(sqrt(N/2^dpbit)).

However, it is true that you can reach a DP faster this way, by computing consecutive points.

Using paths:

It is like drawing a batch of 2^dpbit random points at each DP, so the time to solve is O(sqrt(N)/2^dpbit).

The "gain" using paths is 2^dpbit, while without paths the "gain" is only sqrt(2^dpbit).

So even if reaching a DP without a path is faster, the gain is not enough to beat paths.
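The two estimates can be compared directly, writing d for dpbit and N for the range size and measuring the time to solve in collected DPs (this only makes the post's arithmetic explicit):

$$
T_{\text{DP only}} = O\!\left(\sqrt{N/2^{d}}\right) = O\!\left(\frac{\sqrt{N}}{2^{d/2}}\right),
\qquad
T_{\text{paths}} = O\!\left(\frac{\sqrt{N}}{2^{d}}\right),
\qquad
\frac{T_{\text{DP only}}}{T_{\text{paths}}} = 2^{d/2}
$$

With DP = 25, as in the #115 run, that factor is 2^12.5, roughly 5,800 (up to constants).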

brainless

Member

Activity: 316

Merit: 34

June 16, 2020, 09:51:06 AM

"I don't see how to reuse the work file on a different range. This work file is for [2^114,2^115-1]." I don't mean a different range: the same range [2^114,2^115-1], with a pubkey from that same range. In short, what if another pubkey is in the range [2^114,2^115-1], which the work file already covers?

arulbero

Legendary

Activity: 1914

Merit: 2071

June 16, 2020, 10:19:51 AM

Last edit: June 16, 2020, 12:24:13 PM by arulbero

Unfortunately I don't see how to reuse the work file on a different range.

This work file is for [2^114,2^115-1].

If you translate the kangaroos, the paths will differ, so you would have to redo all the work.

You can (see here -> https://bitcointalk.org/index.php?topic=5244940.msg54546770#msg54546770; it is enough to map the new task [1, 2^119 - 1] onto the previous one [1, 2^114 - 1]), but it's not worth it. You have computed about 2^58.36 steps, and you now know the private keys of 2^33.36 DPs, all in the interval [1, 2^114 - 1]. (Why did you say [2^114,2^115-1]? You only know that one public key P is in the range [2^114, 2^115 - 1]; all the public keys/points you have computed lie in [1, 2^114 - 1].)

In theory, to have a 50% chance of a collision in the #120 search, if you reuse the 300 GB of DPs you need to do another 2^61.70 steps (wild only). If you instead restart from scratch, you need about 2^61 steps.
